Now you can try ‘the world’s best worst algorithm’

Whatever salacious content you’ve encountered on the internet today, I doubt anything will make you sweat like Fairly Intelligent™.

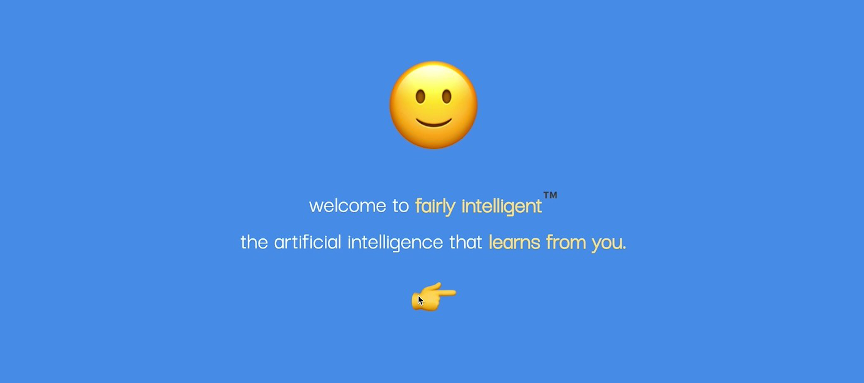

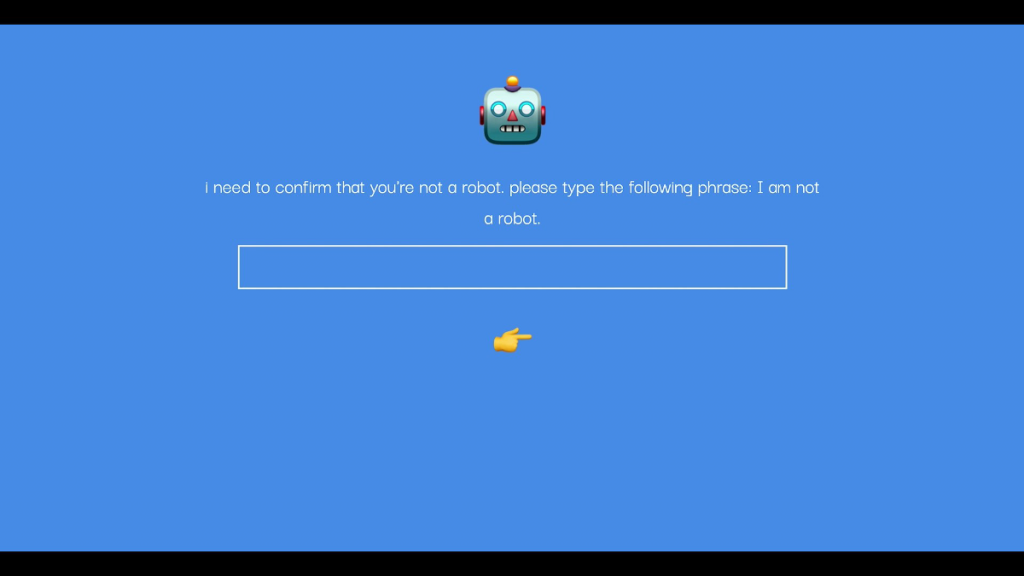

Artist and game maker A.M. Darke has created a speculative Artificial Intelligence to confront the biases innate in the algorithms that orchestrate our everyday lives. With a cheery smile emoji dominating the top of the page, the experience seems innocent enough: there’s a friendly, human-sounding narrator and simple clickable choices. But soon enough, the narration gets a little more biting, and the questions a little more butt-clenching – you may start checking over your shoulder to see if anyone is watching you make your selections.

Fairly Intelligent™ was commissioned by the Open Data Institute (ODI) as part of their Rules of Engagement show, curated by Antonio Roberts. The show aims to, as Roberts puts it, “make a case for ethical practices when working with data.” Fairly Intelligent™ launched on June 18, 2021. In an ODI Lunchtime Lecture hosted by Roberts (which you can watch on YouTube), Darke discusses how the work is meant to appear game-like and playful, like a seemingly innocuous quiz you might click through on Facebook that will reveal a flattering truth about your personality based on the wine you enjoy. But, as Darke walks us through a bit of the work, we see this façade crack.

“You have really straightforward questions…that are ‘yes’ or ‘no,’ but then one of the things that I like about this project, that was important to me, was having questions…where you don’t get to choose the option that really aligns with maybe your value system or what your beliefs are, and that the system actually forces you to choose a response that you may not like,” Darke says.

According to the speculative A.I., it is trying to determine if you’re the kind of person who should gain access to “the world’s best algorithm.” This will allow you to influence the system from the inside and make it work for everyone! Huzzah! Eager to start changing the world, I begin clicking. At the end of the work, I am slotted into a category based on my responses. My access is denied.

How exactly does Fairly Intelligent™ make its assessment? Sorry, it won’t tell you.

“It’s really important to me that I showed these factors, but that I also didn’t present too much context, too much to make it too…didactic,” Darke explains, “because I wanted to provide the user with the experience of knowing that they’ve been sorted into this category without having an obvious relationship to which question led to this kind of judgment.”

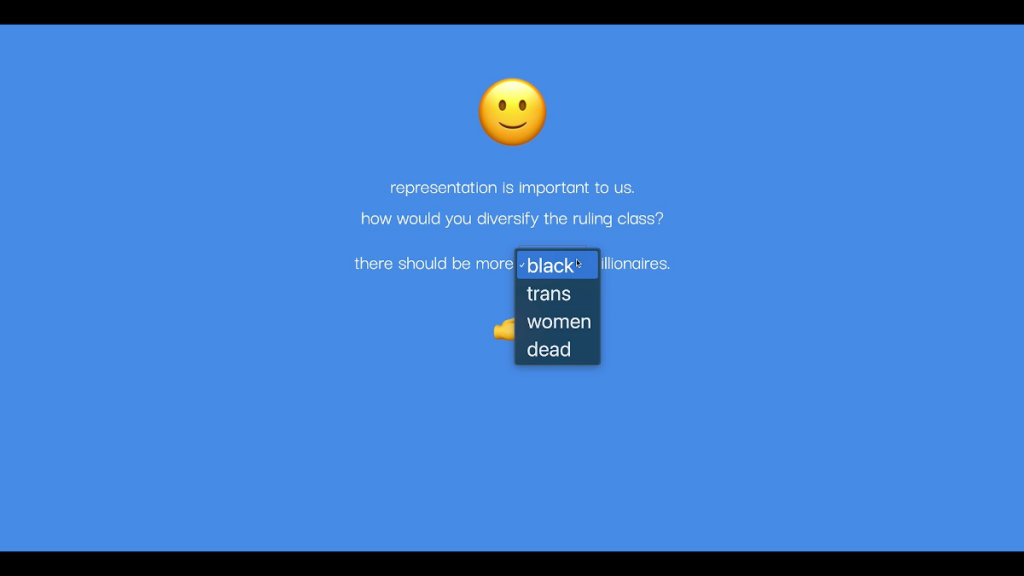

Indeed, we engage with these sorts of algorithms all the time and, as Darke emphasizes, questions that may seem “straightforward” are often “a proxy for something else, something that’s more useful for whoever has designed the system.”

Perhaps the system really wants to know, based on data collected about mental health, who is likely to purchase a meditation app. Or asparagus water. “Asparagus water is a thing!” Darke laughs. (Apparently, it’s eight dollars and found at Whole Foods, which is really no surprise.)

With Fairly Intelligent™, Darke hopes to go further than “awareness.” We all know our data is being collected and sold. So rather than performing the limp gesture of “spreading awareness,” Fairly Intelligent™ demands that we think: think skeptically, think critically, and then open a dialogue about what algorithms that work for everyone’s benefit might look like.

One solution that is widely thrown around boils down to, essentially, more data. Specifically, more diverse data. But, Darke asks, is the way forward so simple? Will continuing to affirm systems of mass surveillance by feeding them more and more data, however “inclusive,” push us towards algorithmic fairness?

Likely not, says Darke. A more diverse data pool presents its own risks. Darke points to examples such as algorithms that identify protesters, systems that track and oppress a particular demographic, or a for-profit college preying on individuals an algorithm has assessed as lonely, depressed, and desperate.

“Companies who are saying, ‘I’m so invested in diversity! What we need is just, like, more Black women coders, and that’s the answer!’ It’s like, I don’t need more queer folks working on drone piloting software. That’s not the answer,” says Darke.

“Something that seems reasonable and interesting to explore is the idea of creating smaller, localized algorithms,” Darke continues. “That the first question when you create something like this is not, you know, how do we get more data, but why do we need this data, and how will it serve the community, and do people have consent, has everyone fully opted in, can they opt out at any time, can they withdraw that consent? And not just…is it causing harm after the fact, but, first of all, how can it best serve the community?”

Darke also encourages pushing for regulation of these systems (think the EU’s General Data Protection Regulation), penalties for violations of these regulations, and complete transparency on data collection and its specific usage.

So, if your day is lacking a little sweat (or a little laughter), give Fairly Intelligent™ a go (it does not collect, store, or share your data in any way).

Find out more about A.M. Darke on their website.

*

All images courtesy of the artist, The Open Data Institute, and PR representative Binita Walia.
